OpsVerse Chronicles: Making of Katafraktos

A series which follows detailed, thematic, recounts of a beginner’s real-time firefighting incidents with Kubernetes (and cloud infrastructure in general) in their effort to reach the ideal of Katafraktos – well armored by practical experience.

Info

If Kubernetes is Greek for helmsman/pilot, then what do you call someone who safeguards the pilot? Greek: Katafraktos – fully armored. “Equites cataphractarii, or simply cataphractarii, were the most heavily armored type of Roman cavalry in the Imperial Roman army and Late Roman army. The term derives from a Greek word, κατάφρακτος katafraktos, meaning “covered over” or “completely covered” (see Cataphract).”

The Making of Katafraktos : Subduing a DevOps Cerberus

It was just another Friday afternoon when my mentor, Sat, and I met to upgrade our integration (think, staging) cluster – where we test prod release features – to the latest version. Since Kubernetes v1.19 was going to reach EOL status soon, we’d decided that it would be best to upgrade the integration EKS clusters sequentially up to v1.21 as soon as possible – the urgency amplified by the fact that it’s used by our team as a shared cluster for testing features dependent on the latest release. I thought it would be a fun learning experience for me to shadow him as he upgraded the cluster, and its respective nodes, to v1.21 using HashiCorp’s Infra-as-Code (IaC) tool called Terraform. Since we were upgrading our integration cluster, which hosts all of our team’s near-prod instances, there was little room for error as it would cost us valuable test data.

Tech Stack

What is Terraform?

Terraform is a cloud-agnostic IaC provider used to programmatically orchestrate changes to our clusters, such as increasing node count, dealing with permissions, or provisioning resources, therefore increasing transparency, repeatability, and auditability of infra changes.

What is EKS?

EKS stands for Elastic Kubernetes Service. It’s a managed K8s service offered by Amazon, similar to GKE (Google Kubernetes Engine), provided by Google Cloud, and Microsoft Azure’s AKS (Azure Kubernetes Service)

The Blueprint

The deliverables for our cluster upgrade were carefully defined, and it was a supposedly linear process of making changes to our Terraform codebase. That didn’t mean it was a job to be rushed, though, as one of the prerequisites was to resolve an unexpected PVC “Terminating” state by restoring data from our automated backups of VictoriaMetrics server data, which are stored in AWS S3 buckets; if this was overlooked, before going through with the upgrade, the Pods would have been force deleted during the upgrade and we would’ve lost a substantial amount of our team’s data.

Tech Stack

What is VictoriaMetrics?

VictoriaMetrics is a high-performance, scalable, open source time-series database which is used in the storage of metrics and other observability-related data.Meet the Cerberus: A Problematic Spawn

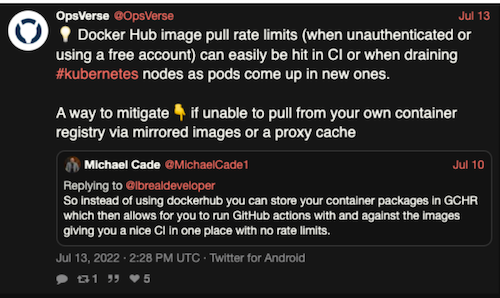

I remember vividly, early in the cordoning process, that Sat’s veteran intuition kicked in and he made a remark stating that he felt like it’s time to check in on the pods. Naturally he ran a kubectl command to check for the pods’ status and there it was, a metaphorical Cerberus with several ImagePullBackOff errors reared its heads. Without much delay, Sat and I quickly got to identifying the root cause: it was soon determined that the error was caused due to a rate limit being set on images being pulled from DockerHub. Just to elaborate and provide more context on this problem, this meant that quite a few of the Pods from the already cordoned nodes, which had kubelet pulling images from the public Docker registry, were rate limited and effectively rendered incapacitated, mid-upgrade.

Tech Stack

What is Harbor?

Harbor is an open source registry that secures artifacts with policies and role-based access control, ensures images are scanned and free from vulnerabilities, and signs images as trusted.

Since we already had some of the essential container images in our own registry, I asked if I could help with resolving the error. Sat stated that the solution that he was implementing was a temporary one, and since he wasn’t updating the templates in Git (we do GitOps) the Pod manifests would be susceptible to overwriting, so he suggested that I could get started on editing the integration instances’ templates to make sure that the next time the Pods were deleted in an upgrade they would directly be pulling the images from our own container registry referenced in the template files.

Focused Attack: Subduing the Cerberus

I took note of Sat’s instructions and got started on updating the templates, which required me to run the helm template command against each instance’s helm charts and make a list of all the container images that needed to be manually updated in the values file. Fortunately, our production releases referenced images from our own registry, so we could almost immediately update the values. However, our integration releases had some images not yet pushed out to production, which made verifying against the helm template a bit laborious.

By the time I was done with updating the template for the first instance, Sat came back with news that he’d successfully managed to update all the Pod manifests to pull from our own registry. All that was left to finish the upgrade to v1.21 was to manually cordon and drain each of the remaining nodes to avoid the additional expense of having a substantial amount of nodes in the cluster. It was already evening at this point, having started the upgrade process in the afternoon, and we’d been at this upgrade process for a couple hours now. Sat and I decided to sync up and discuss our progress before deciding to call it a day, as most of the ImagePullBackOff errors had been resolved and the Pods were back in a healthy state. All that was left to do was update the templates for the remaining releases as a future defense during an upgrade to the next version.

XP Gained

Basically, the root cause of the problem was due to the overlooking of seemingly inconsequential (quite the opposite) info: the existence of rate limits on public Docker repos and the Pod-references to those images. Although we had our own container registry, with the essential image references being used by our prod-cluster, in order to subvert any potential problems associated with rate limiting, we’d missed updating some of the newer image reference locations in our constantly changing helm charts which led to a series of ImagePullBackOff errors.

At the end of the whole ordeal, I became more knowledgeable about the entire upgrade process and am now cognizant of the existence of rate limits. Although this problem felt stressful and tiring in that heated period, at the end of it we were exhilarated for having gotten past a problematic internal incident in a relatively short time, and in the process gained considerable firefighting experience.